IVILAB Research Projects

PSI objects rooms plants neurons fungus vision_and_language trails SLIC tracking parts

See also: IVILAB Undergraduate research

| Go to | Kobus Barnard 's research page | Kobus Barnard 's Home Page | See also: Research with undergraduates |


Inferring human activity from images using conventional approaches (e.g.,
classification based on low-level features) is difficult because meaningful
activity concepts are abstract at the semantic level and exhibit a very wide
range of visual characteristics. Consider the verb "follow." A person may follow
another person, a car may follow a motorcycle, a dog may follow its owner, and
so on. Further, whether the activity "follow" is actually better described as
"chase" depends on intent, which is also not directly linked to low-level
features. In this project the goal is to develop methods for deep understanding
of activity from video.

Persistent Stare through Imagination (PSI) |

|

|

This project is developing approaches for learning stochastic 3D geometric
models for object categories from image data. Representing objects and their
statistical variation in 3D removes the confounds of the imaging process, and is
more suitable for understanding the relation of form and function and how the
object integrates into scenes.

|

|

|

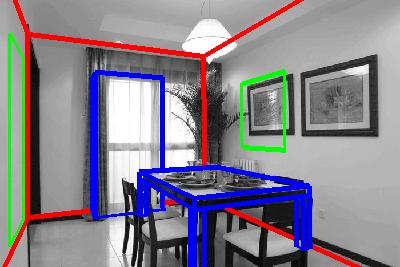

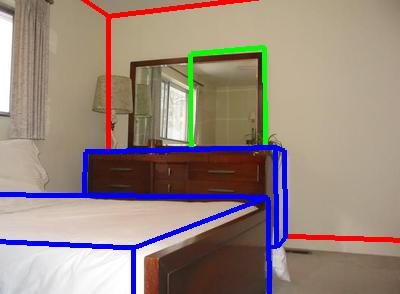

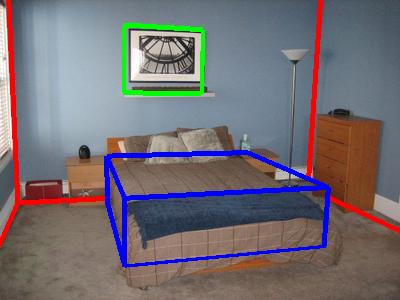

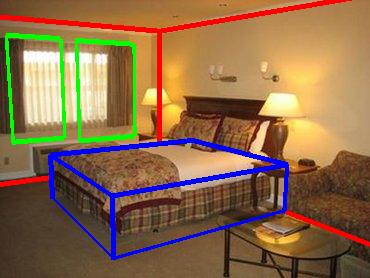

In conjunction with the previously discussed project, we would like to
understand scenes in geometric and semantic terms: what is where in 3D. Doing
this from a single 2D image involves inferring the parameters of the camera,
which can be done assuming a strong model. In this case we adopt the Manhattan
world assumption, namely that most long edges are parallel to three principal
axes. Different from other work, we develop a generative statistical model for
scenes, and rely only on detected edges for inference (for now).
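One way to make the Manhattan world assumption concrete is to label each detected edge segment with the principal axis it is consistent with. The sketch below only illustrates the assumption and is not the generative model used in this project. It assumes the three vanishing points are already known, and the coordinates, the 5-degree threshold, and the function names are all hypothetical.

```python
import math

def angle_to_vanishing_point(segment, vp):
    """Angle in radians (0 to pi/2) between a segment and the line from
    the segment midpoint to the vanishing point vp."""
    (x1, y1), (x2, y2) = segment
    mx, my = (x1 + x2) / 2.0, (y1 + y2) / 2.0
    dx, dy = x2 - x1, y2 - y1          # segment direction
    vx, vy = vp[0] - mx, vp[1] - my    # direction toward the vanishing point
    cross = abs(dx * vy - dy * vx)
    dot = abs(dx * vx + dy * vy)
    return math.atan2(cross, dot)

def label_segments(segments, vanishing_points, max_angle_deg=5.0):
    """Assign each segment the index of the best-aligned vanishing point,
    or None if no vanishing point is within max_angle_deg."""
    labels = []
    for seg in segments:
        angles = [angle_to_vanishing_point(seg, vp) for vp in vanishing_points]
        best = min(range(len(angles)), key=angles.__getitem__)
        ok = math.degrees(angles[best]) <= max_angle_deg
        labels.append(best if ok else None)
    return labels

# Hypothetical vanishing points (pixels) for the three principal axes.
vps = [(-2000.0, 300.0), (2500.0, 300.0), (320.0, -5000.0)]

# Hypothetical detected edge segments, given as pairs of end points.
segments = [
    ((100.0, 400.0), (-110.0, 390.0)),  # points toward vps[0]
    ((400.0, 400.0), (610.0, 390.0)),   # points toward vps[1]
    ((320.0, 100.0), (320.0, 200.0)),   # vertical, points toward vps[2]
    ((300.0, 300.0), (400.0, 400.0)),   # diagonal clutter edge
]

labels = label_segments(segments, vps)
print(labels)  # [0, 1, 2, None]
```

Segments labeled `None` are edges that follow none of the three axes, which is why the assumption is stated for "most" long edges rather than all of them.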

|

|

|

Quantifying plant geometry is critical for understanding how subtle details in
form are caused by molecular and environmental changes. Developing automated
methods for determining plant structure from images is motivated by the
difficulty of extracting these details by human inspection, together with the
need for high throughput experiments where we can test against a large number of
variables.

|

|

|

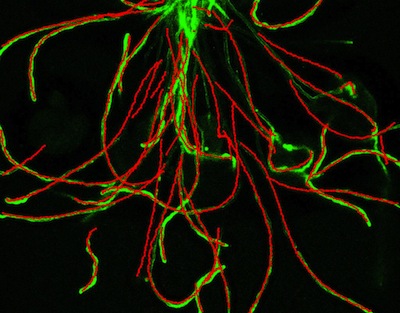

We are developing models for the structure of Drosophila brain neurons grown in
culture. Genetic mutations and/or environmental factors can have a big impact on
neuron morphology, which in turn affects their function. Automated methods for
quantifying the neuron structure will enable large scale screens for drugs that
compensate for genetic defects or mitigate the effects of toxins.

|

|

|

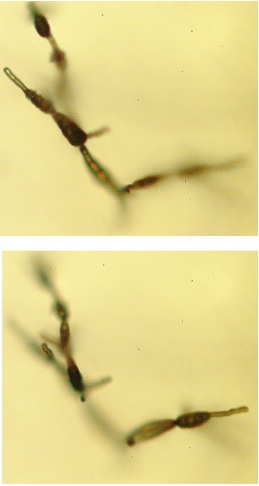

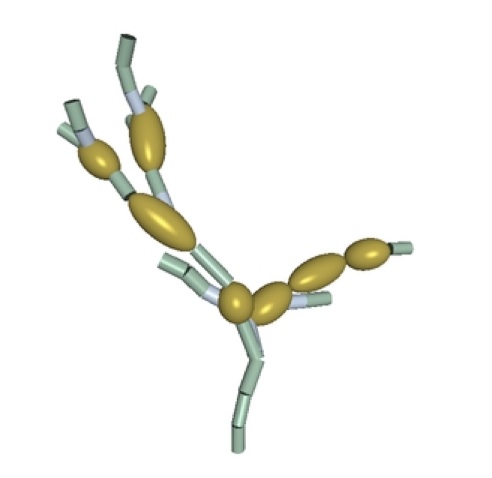

Our goal in this project is to learn and fit structure models for microscopic
filamentous fungi such as those from the genus Alternaria. The data are image
sequences taken at stepped focal depths (stacks). The left-hand image pair shows
two images out of 100. Notice the significant blur due to the limited depth of
field. We deal with the blur and exploit the information hidden in it by
fitting the microscope point spread function together with the model. The
right-hand images show the fitted model, with cylinders corresponding to hyphae
and ellipsoids representing spores.

|

|

|

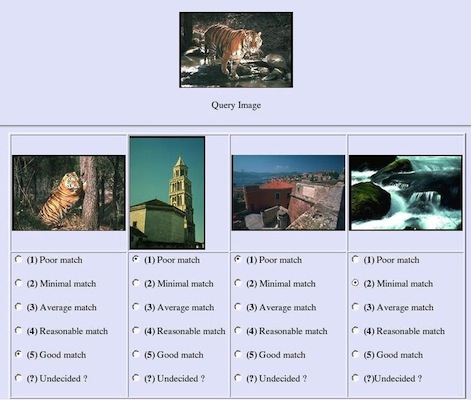

Image keywords and captions provide information about what is in the image, but
we do not know which words correspond to which image elements. Alternatively,
words may provide complementary non-visual information. Dealing with this
correspondence problem enables using such data as training data for image
understanding and object recognition, and can improve multi-modal searching,
browsing, and data mining.

|

|

|

The image to the right shows a satellite photo of a trail with an automatically
extracted trail overlaid in red (end points are provided). In this image the
method works well, but in general, this is a difficult task as trails are often
faint, obscured by trees, and similar in appearance to dry stream beds. Trails
both deteriorate over time and appear due to human activity. The volatile nature
of trails makes extracting them from satellite photographs important. In this
work we exploit GPS tracks of travellers on trails to train and validate
automated methods for extracting them. This work began with Scott Morris's PhD
work, and is being extended by Andrew Predoehl.

|

|

|

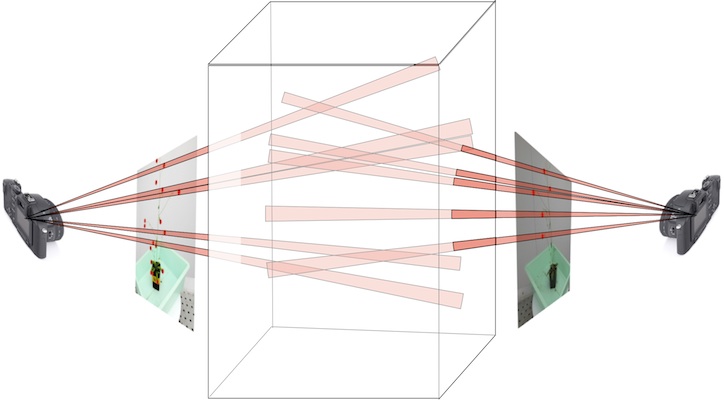

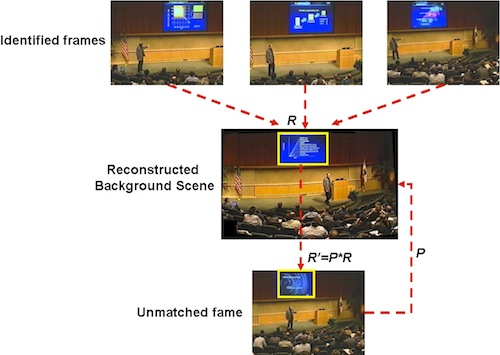

The goal of this project is to make instructional video more accessible to
browsing and searching. A key tool for this is to match slide images from
presentation files (PPT, Keynote, PDF) to videos that use them. We approach this
problem by using SIFT keypoint matching under a consistent homography. The
figure to the right shows how we can use initial matches to build a background
model, which in turn helps us match more difficult frames because we now know
where the slide image must be.
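The idea of keeping only keypoint matches that agree with a single homography can be sketched as follows. This is a minimal illustration under stated assumptions, not the project's code: the SIFT step is not shown, the matches are assumed to be given as (slide point, frame point) pairs, and all names, coordinates, and the 3-pixel tolerance are hypothetical. For brevity it tries every 4-match subset; practical systems usually sample subsets at random instead (RANSAC).

```python
import itertools

def solve_linear(a, b):
    """Solve a x = b by Gauss-Jordan elimination with partial pivoting."""
    n = len(b)
    m = [row[:] + [rhs] for row, rhs in zip(a, b)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        if abs(m[pivot][col]) < 1e-12:
            raise ValueError("degenerate point configuration")
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(n):
            if r != col:
                f = m[r][col] / m[col][col]
                m[r] = [x - f * y for x, y in zip(m[r], m[col])]
    return [m[i][n] / m[i][i] for i in range(n)]

def homography_from_4(pairs):
    """Homography (last entry fixed to 1) mapping 4 slide points to frame points."""
    a, b = [], []
    for (x, y), (u, v) in pairs:
        a.append([x, y, 1.0, 0.0, 0.0, 0.0, -u * x, -u * y])
        b.append(u)
        a.append([0.0, 0.0, 0.0, x, y, 1.0, -v * x, -v * y])
        b.append(v)
    return solve_linear(a, b) + [1.0]

def apply_h(h, p):
    """Map point p through h; returns None if p maps to infinity."""
    x, y = p
    w = h[6] * x + h[7] * y + h[8]
    if abs(w) < 1e-12:
        return None
    return ((h[0] * x + h[1] * y + h[2]) / w,
            (h[3] * x + h[4] * y + h[5]) / w)

def consistent_matches(matches, tol=3.0):
    """Return the largest set of matches explained by one homography."""
    best = []
    for sample in itertools.combinations(matches, 4):
        try:
            h = homography_from_4(sample)
        except ValueError:
            continue
        inliers = []
        for src, dst in matches:
            q = apply_h(h, src)
            if q is not None and abs(q[0] - dst[0]) <= tol and abs(q[1] - dst[1]) <= tol:
                inliers.append((src, dst))
        if len(inliers) > len(best):
            best = inliers
    return best

# Hypothetical matches: the slide appears at half size, shifted by (100, 50).
slide_pts = [(0.0, 0.0), (640.0, 0.0), (640.0, 480.0), (0.0, 480.0),
             (200.0, 300.0), (450.0, 100.0)]
matches = [(p, (0.5 * p[0] + 100.0, 0.5 * p[1] + 50.0)) for p in slide_pts]
# Two false matches that no single homography shared with the rest explains.
matches += [((200.0, 100.0), (500.0, 400.0)), ((50.0, 50.0), (10.0, 300.0))]

good = consistent_matches(matches)
```

Here `good` keeps the six matches that agree with the slide's placement in the frame and drops the two false ones. Knowing that placement is also what lets later, harder frames be matched against a restricted region.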

|

|

|

Simultaneously tracking many objects with overlapping trajectories is hard
because you do not know which detections belong to which objects. The vision lab
has developed a new approach to this problem and has applied it to several kinds
of data. For example, the image to the right shows tubes that are growing out of
pollen specks (not visible) towards ovules (out of the picture) in an

|

|

|

CAD models provide the 3D structure of many man-made objects such as machine
parts. This project aims to find objects in images based on these models.
However, since the data is most readily available as triangular meshes, 3D
features that are useful for matching 2D images must be extracted from mesh
data. Having done that, we need fast methods to match them to images.
Applications of this work include identifying parts in machinery maintenance
facilities and finding possible manufactured parts based on user sketches.

|

|

Inactive and Subsumed Projects

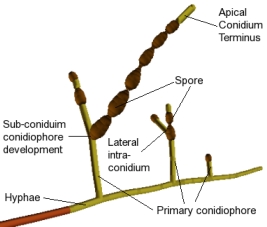

To the right is a labeled model of the fungus Alternaria generated by a
stochastic L-system built by undergraduate researcher Kate Taralova. For more
information, follow this link. This project continues as part of the plant
modeling project.
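For readers unfamiliar with stochastic L-systems, the sketch below shows the basic mechanism: every symbol is rewritten in parallel, and the replacement is drawn at random from weighted alternatives. The grammar here is purely hypothetical and is not the Alternaria model described above; `H` stands for a hypha segment, `S` for a spore, `[` and `]` for the start and end of a branch, and `+` for a turn.

```python
import random

# Hypothetical rule set: each symbol maps to (probability, replacement) options.
RULES = {
    "H": [(0.6, "HH"), (0.3, "H[+H]H"), (0.1, "HS")],
}

def expand(axiom, rules, steps, seed=0):
    """Rewrite every symbol in parallel for the given number of steps.
    Symbols without a rule are copied unchanged."""
    rng = random.Random(seed)  # fixed seed makes a given structure reproducible
    s = axiom
    for _ in range(steps):
        out = []
        for ch in s:
            options = rules.get(ch)
            if options is None:
                out.append(ch)
            else:
                weights = [p for p, _ in options]
                choices = [r for _, r in options]
                out.append(rng.choices(choices, weights=weights, k=1)[0])
        s = "".join(out)
    return s

structure = expand("H", RULES, steps=4, seed=1)
print(structure)
```

Different seeds give different structures from the same grammar, which is what makes the system a model of a category of shapes rather than of a single shape.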

|

|

Evaluation methodology for automated region labeling. Link to: 1) IJCV paper and 2) Data and Code. Funding provided by TRIFF. |

|

Link to: 1) CVPR'05 paper and 2) Data.

|

|

The endeavour to build the Large Synoptic Survey Telescope (LSST) is a huge
undertaking. See lsst.org for details. Not surprisingly, a significant part of
the system is the data processing pipeline. We have worked on temporally linking
observations of objects assumed to be asteroids to establish orbits, and on
building data systems for querying the emerging time/space catalog. One of the
goals of this research is to help identify potentially hazardous asteroids. This
is joint work with Alon Efrat, Bongki Moon, and the many individuals working on
the LSST project.

|

|